publications

Publications by category in reverse chronological order. Generated by jekyll-scholar.

2026

- Use the Online Network If You Can: Towards Fast and Stable Reinforcement Learning. A. Hendawy, H. Metternich, T. Vincent, and 3 more authors. International Conference on Learning Representations (ICLR), 2026

The use of target networks is a popular approach for estimating value functions in deep Reinforcement Learning (RL). While effective, the target network remains a compromise solution that preserves stability at the cost of slowly moving targets, thus delaying learning. Conversely, using the online network as a bootstrapped target is intuitively appealing, albeit well-known to lead to unstable learning. In this work, we aim to obtain the best out of both worlds by introducing a novel update rule that computes the target using the MINimum estimate between the Target and Online network, giving rise to our method, MINTO. Through this simple, yet effective modification, we show that MINTO enables faster and stable value function learning, by mitigating the potential overestimation bias of using the online network for bootstrapping. Notably, MINTO can be seamlessly integrated into a wide range of value-based and actor-critic algorithms with a negligible cost. We evaluate MINTO extensively across diverse benchmarks, spanning online and offline RL, as well as discrete and continuous action spaces. Across all benchmarks, MINTO consistently improves performance, demonstrating its broad applicability and effectiveness.

@article{Preprint, url = {https://www.arxiv.org/pdf/2510.02590}, title = {Use the Online Network If You Can: Towards Fast and Stable Reinforcement Learning}, author = {Hendawy, A. and Metternich, H. and Vincent, T. and Kallel, M. and Peters, J. and D'Eramo, C.}, journal = {International Conference on Learning Representations (ICLR)}, year = {2026}, }
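The update rule described in the abstract above, bootstrapping from the minimum of the target-network and online-network estimates, can be illustrated for a discrete-action Q-learning target. This is a minimal sketch, not the paper's implementation: the function name `minto_style_target`, its arguments, and in particular the choice to take the minimum per action before maximizing over actions are assumptions made for illustration.

```python
from typing import Sequence


def minto_style_target(
    reward: float,
    done: bool,
    q_online_next: Sequence[float],
    q_target_next: Sequence[float],
    gamma: float = 0.99,
) -> float:
    """Bootstrapped target from the minimum of online and target estimates.

    Assumption (not confirmed by the abstract): the minimum is taken per
    action first, and the result is then maximized over actions.
    """
    if len(q_online_next) != len(q_target_next):
        raise ValueError("both networks must score the same set of actions")
    if done:
        return reward  # no bootstrapping past a terminal state
    # Per-action pessimistic estimate across the two networks.
    q_min = [min(qo, qt) for qo, qt in zip(q_online_next, q_target_next)]
    return reward + gamma * max(q_min)


# Per-action minimum is [2.0, 4.0], so the target is 1.0 + 0.5 * 4.0.
print(minto_style_target(1.0, False, [2.0, 5.0], [3.0, 4.0], gamma=0.5))  # 3.0
```

With the online network alone the same transition would bootstrap from 5.0, which is the kind of optimistic estimate the minimum is meant to damp.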

2025

- It is All Connected: Multi-Task Reinforcement Learning via Mode Connectivity. A. Hendawy, H. Metternich, J. Peters, and 2 more authors. Eighteenth European Workshop on Reinforcement Learning, 2025

Acquiring a universal policy that performs multiple tasks is a crucial building block in endowing agents with generalized capabilities. To this end, the field of Multi-Task Reinforcement Learning (MTRL) proposes sharing parameters and representations among tasks during the learning process. Still, optimizing for a single solution that is able to perform various skills remains challenging. Recent works attempt to address these challenges using a mixture of experts, though this comes at the cost of additional inference-time complexity. In this paper, we introduce STAR, a novel MTRL algorithm that leverages mode connectivity to share knowledge across single skills, while remaining parameter-efficient at deployment time. Particularly, we show that single-task policies can be linearly connected in policy parameter space to the multi-task policy, i.e., task performance is maintained throughout the linear path connecting the two policies. Our experimental evaluation demonstrates that mode connectivity at training time induces implicit regularization in the multi-task policy, surpassing related baselines on MTRL benchmarks MuJoCo and Metaworld. Furthermore, STAR achieves competitive performance even with methods that retain multiple models at inference time.

@article{EWRL, url = {https://openreview.net/pdf?id=WKfhk8wizf}, title = {It is All Connected: Multi-Task Reinforcement Learning via Mode Connectivity}, author = {Hendawy, A. and Metternich, H. and Peters, J. and Tiboni, G. and D'Eramo, C.}, journal = {Eighteenth European Workshop on Reinforcement Learning}, year = {2025}, }

- Machine Learning with Physics Knowledge for Prediction: A Survey. J. Watson, C. Song, O. Weeger, and 8 more authors. Transactions on Machine Learning Research, 2025

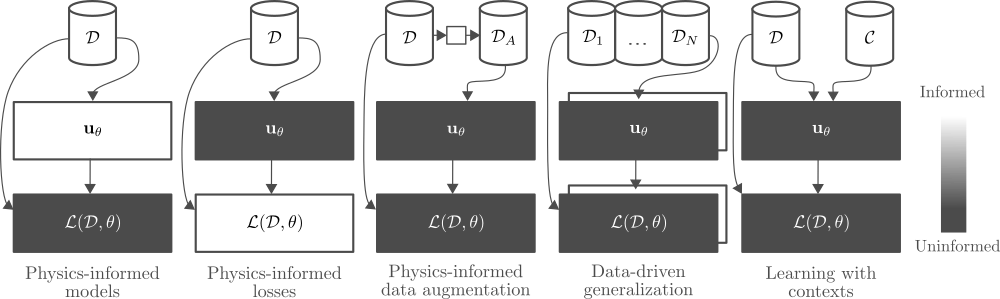

This survey examines the broad suite of methods and models for combining machine learning with physics knowledge for prediction and forecast, with a focus on partial differential equations. These methods have attracted significant interest due to their potential impact on advancing scientific research and industrial practices by improving predictive models with small- or large-scale datasets and expressive predictive models with useful inductive biases. The survey has two parts. The first considers incorporating physics knowledge on an architectural level through objective functions, structured predictive models, and data augmentation. The second considers data as physics knowledge, which motivates looking at multi-task, meta, and contextual learning as an alternative approach to incorporating physics knowledge in a data-driven fashion. Finally, we also provide an industrial perspective on the application of these methods and a survey of the open-source ecosystem for physics-informed machine learning.

@article{physicsMLSurvey, url = {https://arxiv.org/pdf/2408.09840}, title = {Machine Learning with Physics Knowledge for Prediction: A Survey}, author = {Watson, J. and Song, C. and Weeger, O. and Gruner, T. and Le, A. T. and Hansel, K. and Hendawy, A. and Arenz, O. and Trojak, W. and Cranmer, M. and others}, journal = {Transactions on Machine Learning Research}, year = {2025}, }

2024

- Multi-Task Reinforcement Learning with Mixture of Orthogonal Experts. A. Hendawy, J. Peters, and C. D’Eramo. International Conference on Learning Representations (ICLR), 2024

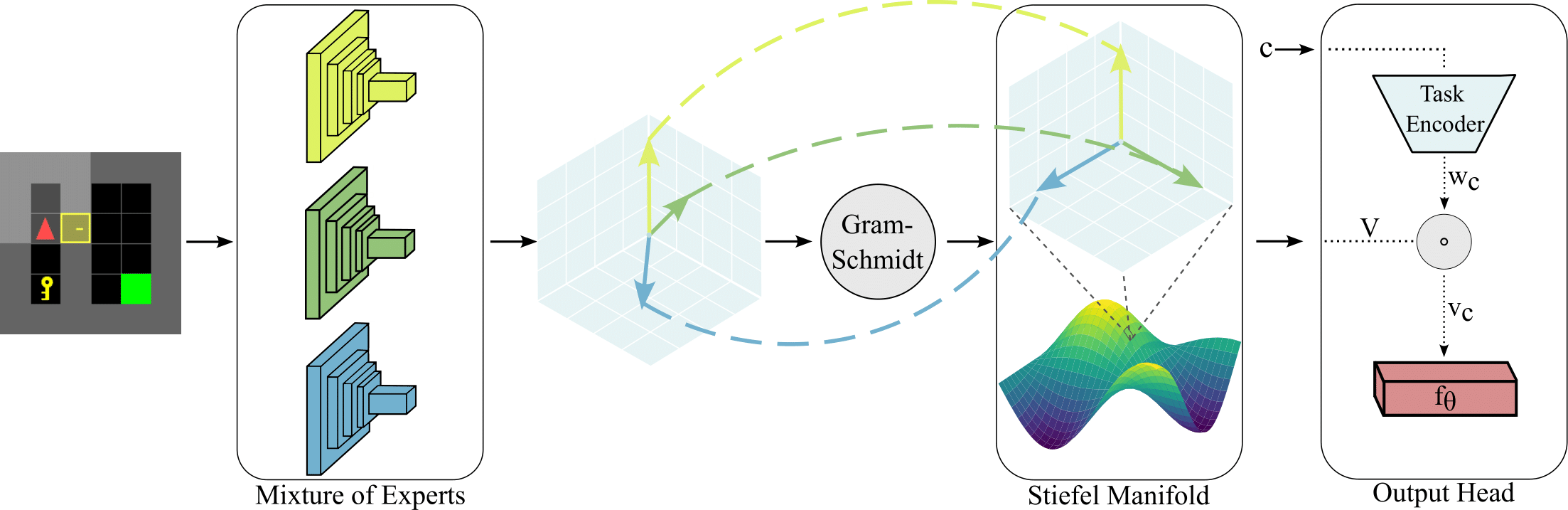

Multi-Task Reinforcement Learning (MTRL) tackles the long-standing problem of endowing agents with skills that generalize across a variety of problems. To this end, sharing representations plays a fundamental role in capturing both unique and common characteristics of the tasks. Tasks may exhibit similarities in terms of skills, objects, or physical properties while leveraging their representations eases the achievement of a universal policy. Nevertheless, the pursuit of learning a shared set of diverse representations is still an open challenge. In this paper, we introduce a novel approach for representation learning in MTRL that encapsulates common structures among the tasks using orthogonal representations to promote diversity. Our method, named Mixture Of Orthogonal Experts (MOORE), leverages a Gram-Schmidt process to shape a shared subspace of representations generated by a mixture of experts. When task-specific information is provided, MOORE generates relevant representations from this shared subspace. We assess the effectiveness of our approach on two MTRL benchmarks, namely MiniGrid and MetaWorld, showing that MOORE surpasses related baselines and establishes a new state-of-the-art result on MetaWorld.

@article{moore, url = {https://arxiv.org/pdf/2311.11385.pdf}, author = {Hendawy, A. and Peters, J. and D'Eramo, C.}, title = {Multi-Task Reinforcement Learning with Mixture of Orthogonal Experts}, journal = {International Conference on Learning Representations (ICLR)}, year = {2024}, }
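The Gram-Schmidt step mentioned in the abstract above can be illustrated on a handful of expert output vectors. This is a rough sketch in plain Python, not the MOORE code: the helper names `gram_schmidt` and `mix`, the tolerance `eps`, and the idea of combining the orthonormal vectors with task-specific weights are assumptions made for illustration.

```python
import math
from typing import List

Vector = List[float]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def gram_schmidt(vectors: List[Vector], eps: float = 1e-9) -> List[Vector]:
    """Orthonormalize `vectors` with the (modified) Gram-Schmidt process."""
    basis: List[Vector] = []
    for v in vectors:
        w = list(v)
        for b in basis:
            coeff = dot(w, b)  # component of w along an earlier basis vector
            w = [wi - coeff * bi for wi, bi in zip(w, b)]
        norm = math.sqrt(dot(w, w))
        if norm > eps:  # drop vectors that add no new direction
            basis.append([wi / norm for wi in w])
    return basis


def mix(basis: List[Vector], weights: List[float]) -> Vector:
    """Hypothetical task-specific representation: weighted sum of the basis."""
    if len(basis) != len(weights):
        raise ValueError("need one weight per basis vector")
    dim = len(basis[0])
    return [sum(w * b[i] for w, b in zip(weights, basis)) for i in range(dim)]


# Three hypothetical expert outputs; the third is the sum of the first two.
experts = [[1.0, 1.0, 0.0], [1.0, 0.0, 1.0], [2.0, 1.0, 1.0]]
basis = gram_schmidt(experts)
print(len(basis))  # 2, because the third vector is linearly dependent
```

Orthogonalizing forces each retained vector to contribute a direction the earlier ones lack, which is one way to read the abstract's "orthogonal representations to promote diversity".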

2023

-

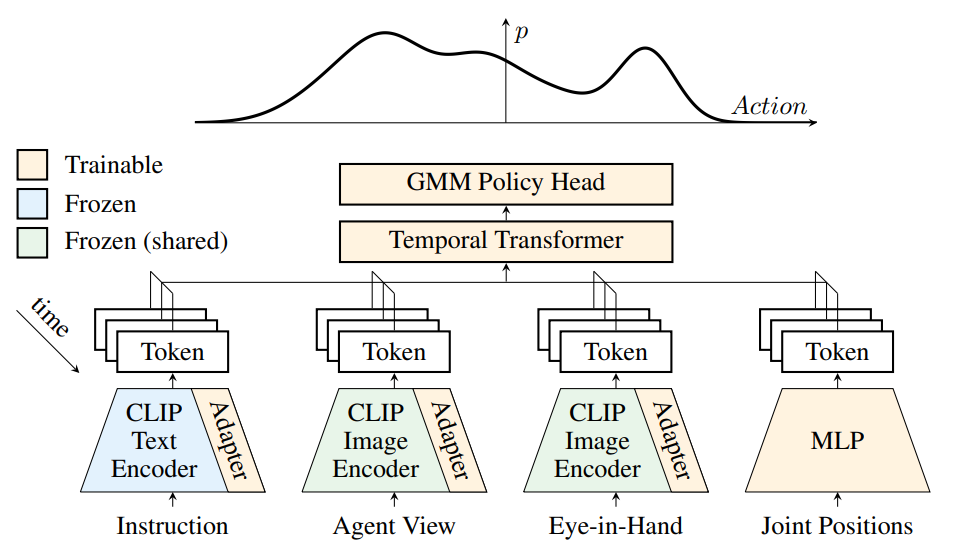

Parameter-efficient Tuning of Pretrained Visual-Language Models in Multitask Robot LearningM. Mittenbuehler , A. Hendawy , C. D’Eramo , and 1 more author2023

Multimodal pretrained visual-language models (pVLMs) have showcased excellence across several applications, like visual question-answering. Their recent application for policy learning manifested promising avenues for augmenting robotic capabilities in the real world. This paper delves into the problem of parameter-efficient tuning of pVLMs for adapting them to robotic manipulation tasks with low-resource data. We showcase how Low-Rank Adapters (LoRA) can be injected into behavioral cloning temporal transformers to fuse language, multi-view images, and proprioception for multitask robot learning, even for long-horizon tasks. Preliminary results indicate our approach vastly outperforms baseline architectures and tuning methods, paving the way toward parameter-efficient adaptation of pretrained large multimodal transformers for robot learning with only a handful of demonstrations.

@article{corl_leap_paper, url = {https://openreview.net/pdf?id=UsY1YvsPzK}, author = {Mittenbuehler, M. and Hendawy, A. and D'Eramo, C. and Chalvatzaki, G.}, title = {Parameter-efficient Tuning of Pretrained Visual-Language Models in Multitask Robot Learning}, publisher = {CoRL 2023 Workshop on Learning Effective Abstractions for Planning (LEAP)}, year = {2023}, } -

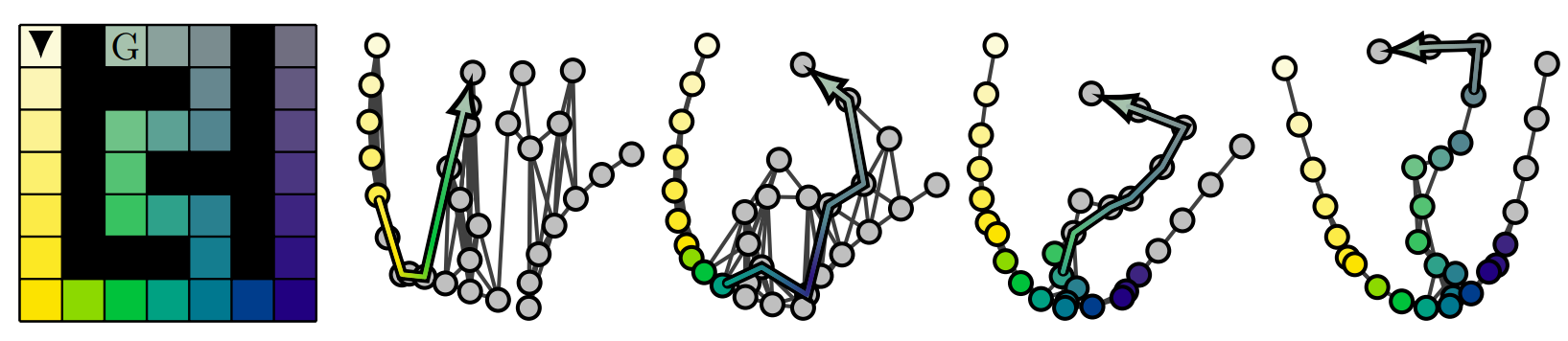

Using Proto-Value Functions for Curriculum Generation in Goal-Conditioned RLH. Metternich , A. Hendawy , P. Klink , and 2 more authors2023

In this paper, we investigate the use of Proto Value Functions (PVFs) for measuring the similarity between tasks in the context of Curriculum Learning (CL). PVFs serve as a mathematical framework for generating basis functions for the state space of a Markov Decision Process (MDP). They capture the structure of the state space manifold and have been shown to be useful for value function approximation in Reinforcement Learning (RL). We show that even a few PVFs allow us to estimate the similarity between tasks. Based on this observation, we introduce a new algorithm called Curriculum Representation Policy Iteration (CRPI) that uses PVFs for CL, and we provide a proof of concept in a Goal-Conditioned Reinforcement Learning (GCRL) setting.

@article{crpi, url = {https://openreview.net/pdf?id=DYd2q5wufn}, author = {Metternich, H. and Hendawy, A. and Klink, P. and Peters, J. and D'Eramo, C.}, title = {Using Proto-Value Functions for Curriculum Generation in Goal-Conditioned RL}, publisher = {NeurIPS 2023 Workshop on Goal-Conditioned Reinforcement Learning}, year = {2023}, }

- Towards Discriminative and Transferable One-Stage Few-Shot Object Detectors. K. Guirguis, M. Abdelsamad, G. Eskandar, and 4 more authors. WACV, 2023

Recent object detection models require large amounts of annotated data to train on new classes of objects. Few-shot object detection (FSOD) aims to address this problem by learning novel classes given only a few samples. While competitive results have been achieved using two-stage FSOD detectors, one-stage FSODs typically underperform compared to them. We make the observation that the large gap in performance between two-stage and one-stage FSODs is mainly due to the weak discriminability of one-stage FSODs, which is explained by a small post-fusion receptive field and a small number of foreground samples in the loss function. To address these limitations, we propose the Few-shot RetinaNet (FSRN), which consists of a multi-way support training strategy to augment the number of foreground samples for dense meta-detectors, an early multi-level feature fusion providing a wide receptive field that covers the whole anchor area, and two augmentation techniques on query and source images to enhance transferability. Extensive experiments show that the proposed approach addresses the limitations and boosts both discriminability and transferability. FSRN is almost two times faster than two-stage FSODs while remaining competitive in accuracy, and it outperforms the state-of-the-art one-stage meta-detectors and also some two-stage FSODs on the MS-COCO and PASCAL VOC benchmarks.

@article{tdtosfsod, url = {https://arxiv.org/abs/2210.05783}, author = {Guirguis, K. and Abdelsamad, M. and Eskandar, G. and Hendawy, A. and Kayser, M. and Yang, B. and Beyerer, J.}, title = {Towards Discriminative and Transferable One-Stage Few-Shot Object Detectors}, publisher = {Winter Conference on Applications of Computer Vision (WACV) 2023}, year = {2023}, copyright = {arXiv.org perpetual, non-exclusive license}, }

2022

- CFA: Constraint-based Finetuning Approach for Generalized Few-Shot Object Detection. K. Guirguis, A. Hendawy, G. Eskandar, and 3 more authors. L3D-IVU, 2022

Few-shot object detection (FSOD) seeks to detect novel categories with limited data by leveraging prior knowledge from abundant base data. Generalized few-shot object detection (G-FSOD) aims to tackle FSOD without forgetting previously seen base classes and, thus, accounts for a more realistic scenario, where both classes are encountered during test time. While current FSOD methods suffer from catastrophic forgetting, G-FSOD addresses this limitation yet exhibits a performance drop on novel tasks compared to the state-of-the-art FSOD. In this work, we propose a constraint-based finetuning approach (CFA) to alleviate catastrophic forgetting, while achieving competitive results on the novel task without increasing the model capacity. CFA adapts a continual learning method, namely Average Gradient Episodic Memory (A-GEM), to G-FSOD. Specifically, more constraints on the gradient search strategy are imposed, from which a new gradient update rule is derived, allowing for better knowledge exchange between base and novel classes. To evaluate our method, we conduct extensive experiments on the MS-COCO and PASCAL-VOC datasets. Our method outperforms current FSOD and G-FSOD approaches on the novel task with minor degradation on the base task. Moreover, CFA is orthogonal to FSOD approaches and operates as a plug-and-play module without increasing the model capacity or inference time.

@article{cfa, url = {https://arxiv.org/abs/2204.05220}, author = {Guirguis, K. and Hendawy, A. and Eskandar, G. and Abdelsamad, M. and Kayser, M. and Beyerer, J.}, title = {CFA: Constraint-based Finetuning Approach for Generalized Few-Shot Object Detection}, publisher = {Workshop on Learning with Limited Labelled Data for Image and Video Understanding (L3D-IVU)}, year = {2022}, copyright = {arXiv.org perpetual, non-exclusive license}, }
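For context on the abstract above, the standard A-GEM projection that CFA starts from can be sketched as follows. This shows only the baseline A-GEM rule, not the extra constraints or the new update rule that CFA derives. The function name `agem_project` and the reading of the reference gradient as one computed on stored base-class samples are assumptions made for illustration.

```python
from typing import List


def agem_project(grad: List[float], ref_grad: List[float]) -> List[float]:
    """Standard A-GEM rule: remove the part of `grad` that conflicts with `ref_grad`.

    `grad` is the gradient of the current (e.g. novel-class) loss and `ref_grad`
    the gradient on a memory batch (assumed here to hold base-class samples).
    """
    if len(grad) != len(ref_grad):
        raise ValueError("gradients must have the same length")
    inner = sum(g * r for g, r in zip(grad, ref_grad))
    if inner >= 0.0:
        return list(grad)  # no conflict: the update does not hurt the memory loss
    ref_sq = sum(r * r for r in ref_grad)  # > 0 whenever inner < 0
    scale = inner / ref_sq
    # Subtract the component of grad along ref_grad; the result is orthogonal to it.
    return [g - scale * r for g, r in zip(grad, ref_grad)]


print(agem_project([1.0, -1.0], [0.0, 1.0]))  # [1.0, 0.0]
```

After projection, the update no longer has a negative inner product with the memory gradient, which is the mechanism A-GEM uses to limit forgetting.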

2021

- Constraint-based Optimization Approach for Generalized Few-Shot Object Detection. A. Hendawy. Uni Stuttgart, Nov 2021

@book{msc_thesis, title = {Constraint-based Optimization Approach for Generalized Few-Shot Object Detection}, author = {Hendawy, A.}, year = {2021}, month = nov, publisher = {University of Stuttgart} }

- Material Identification using MIMO Radars in Non-contact Dynamical Environments. A. Hendawy. Uni Stuttgart, Apr 2021

Identifying the material type of objects is one of the assets of a robust autonomous system. Examples include an autonomous vehicle changing its speed given the material of the ground, a robot vacuum switching between operating modes based on the type of floor, or a rescue robot detecting humans under building debris. Computer Vision (CV) outperforms its peers in many real-life challenges. Although Convolutional Neural Networks (CNNs) achieve outstanding performance for material classification given images, they are limited to suitable lighting conditions, distinguishable object textures, and unblocked objects. Given those limitations, images are no longer the appropriate input for material classification. On the other hand, recent works utilize Intermediate Frequency (IF) radar signals for material classification to tackle these limitations. IF signals are high-variance by nature as a result of many factors, like oscillation or the relative distance of the object in front of the radar. Different preprocessing techniques have been used to feed robust, low-variance data to a Neural Network (NN) model. However, a noticeable delay arises during inference. Therefore, we propose a radar-based material classifier which deals with IF signals yet is robust against their high variance. Moreover, former works succeed in classifying various material types using IF signals. However, the classification setting requires the object to be in contact with the radar. The burden of non-contact classification has never been tackled by any former Deep Learning (DL) work. Accordingly, a new learning setting is proposed to map the high-variance non-contact input domain to a low-variance input domain as in the contact case. This approach treats the effect of the distance as noise that can be removed by an appropriate architecture. A modified version of WaveNet is adopted as our denoising architecture. As a result, a low intra-variance manifold of each class is formed, which can be easily classified using a shallow NN. Since a public radar-based dataset for material classification is not available for training and evaluation, we present a radar-based material classification dataset collected using a 24 GHz Millimeter-wave (mmWave) radar to provide a well-defined benchmark. Since this is the first work to tackle the material classification problem in the non-contact case, we lack a former work to compare against. Thus, we adopt a well-known image classification family, ResNet [1], as our baseline. Our approach outperforms the classification baseline in terms of test accuracy. In addition, robust performance is shown in a real-life scenario.

@book{rp_thesis, title = {Material Identification using MIMO Radars in Non-contact Dynamical Environments}, author = {Hendawy, A.}, year = {2021}, month = apr, publisher = {University of Stuttgart} }

2018

- A Hybrid Approach for Constrained Deep Reinforcement Learning. A. Hendawy. TUM, Jul 2018

Recently, deep reinforcement learning techniques have achieved tangible results for learning high-dimensional control tasks. Due to the trial-and-error interaction between the autonomous agent and the environment, the learning phase is unconstrained and limited to the simulator. Such exploration has the additional drawback of consuming unnecessary samples at the beginning of the learning process. Model-based algorithms, on the other hand, handle this issue by learning the dynamics of the environment. However, model-free algorithms have a higher asymptotic performance than model-based ones. Our contribution is to construct a hybrid structured algorithm that makes use of the benefits of both methods to satisfy constraint conditions throughout the learning process. We demonstrate the validity of our approach by learning a reachability task. The results show complete satisfaction of the constraint condition, represented by a static obstacle, with fewer samples and higher performance compared to state-of-the-art model-free algorithms.

@book{bsc_thesis, title = {A Hybrid Approach for Constrained Deep Reinforcement Learning}, author = {Hendawy, A.}, year = {2018}, month = jul, publisher = {TUM} }